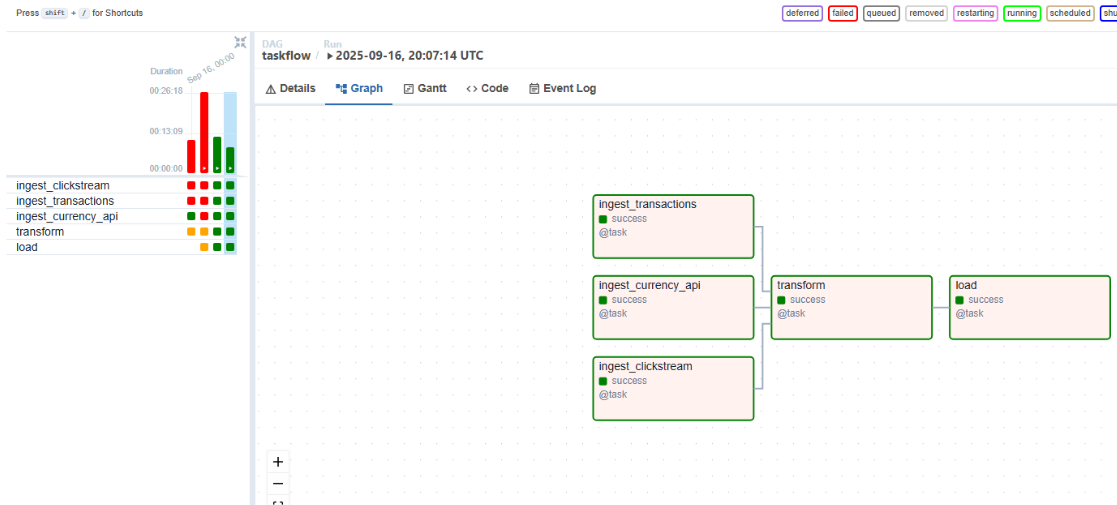

This project was part of a micro-internship in data engineering. Its primary purpose was to learn Google Cloud Platform (GCP) and some of the data products it offers. The work progressed in four stages:

1. We built basic ETL scripts in Python to extract and transform data and load it into Google Cloud Storage (GCS).
2. Building on those scripts, we developed Apache Airflow DAGs to schedule these tasks to run once a day.
3. We migrated the basic Python scripts to PySpark and SQL for the transformations, which let us run them on large clusters of machines through GCP Dataproc.
4. Finally, we loaded the data into partitioned BigQuery tables and performed some basic queries and analysis on it.
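The first stage, a basic Python ETL script, can be illustrated with a minimal standard-library sketch. It is not the project's actual code: the input columns (`id`, `amount`, `timestamp`) are hypothetical, and a local directory stands in for the GCS bucket (a real script would upload to a `gs://` bucket with a GCS client instead). Grouping output by a derived `event_date` is shown as one way to prepare daily data for date-partitioned tables.

```python
import csv
import io
import json
from datetime import datetime
from pathlib import Path


def extract(raw_csv: str) -> list[dict]:
    """Parse raw CSV text into row dicts (stands in for reading a source file or API)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict]) -> list[dict]:
    """Drop rows with a missing amount, cast types, and derive a date for partitioning."""
    cleaned = []
    for row in rows:
        if not row.get("amount"):
            continue  # skip incomplete records
        ts = datetime.fromisoformat(row["timestamp"])
        cleaned.append({
            "id": int(row["id"]),
            "amount": float(row["amount"]),
            "event_date": ts.date().isoformat(),  # hypothetical partition key
        })
    return cleaned


def load(rows: list[dict], out_dir: Path) -> list[Path]:
    """Write newline-delimited JSON, one folder per event_date.

    The local out_dir is an assumption standing in for a GCS bucket prefix.
    """
    by_date: dict[str, list[dict]] = {}
    for row in rows:
        by_date.setdefault(row["event_date"], []).append(row)

    written = []
    for date, group in sorted(by_date.items()):
        path = out_dir / f"event_date={date}" / "part-0000.json"
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text("".join(json.dumps(r) + "\n" for r in group))
        written.append(path)
    return written


if __name__ == "__main__":
    import tempfile

    raw = (
        "id,amount,timestamp\n"
        "1,9.99,2023-01-01T10:00:00\n"
        "2,,2023-01-01T11:00:00\n"
        "3,5.00,2023-01-02T09:30:00\n"
    )
    with tempfile.TemporaryDirectory() as tmp:
        for p in load(transform(extract(raw)), Path(tmp)):
            print(p.relative_to(tmp))
```

Keeping extract, transform and load as separate functions makes each step easy to wrap in its own scheduled task later, which is the role the Airflow DAGs play in the second stage.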